Enabling Secure HTTP for BBC Online - audio and video

Lloyd Wallis

Senior Architect, Online Technology Group

Last year, Paul Tweedy talked about the challenges faced when enabling HTTPS for BBC Online. Once our Online Technology Group had paved the way for BBC web pages to be served over HTTPS, the next step was to do the same for our Audio/Video (AV) media distribution.

Before we go into more detail: many have asked on other blog posts where exactly HTTPS is, as most of the BBC website is still only available over HTTP. Paul’s blog post explained that our platform is now capable of HTTPS, but it is up to individual product teams such as Homepage, News and iPlayer to enable it for their websites. Many product teams have investigated enabling HTTPS, but either have yet to prioritise the necessary work or have decided that there were too many limitations up to now. The biggest limitation for many sites has been that they play AV content, which would still be delivered over HTTP (or even RTMP, the streaming protocol we used before it). That breaks the “secure” padlock in browser address bars when using the Flash player, and leaves us unable to use the HTML5 player on HTTPS pages at all.

Enabling HTTPS media delivery, as well as the increasing number of products with personalisation at their core which require you to sign in with a BBC iD, will be big drivers for products moving over. Since the end of March 2017, we’ve been able to offer HTTPS everywhere it’s needed, and over the last few weeks www.bbc.co.uk/iplayer has started upgrading users to HTTPS.

The AV HTTPS project was broken into phases:

- Enabling HTTPS at the CDN edge

- Rolling out HTTPS where it is preventing use of the HTML5 player (SMP)

- Rolling out HTTPS where Apple ATS was going to require it

- Enabling HTTPS to our origin AV services

- A/B testing HTTPS in our HTML5 player

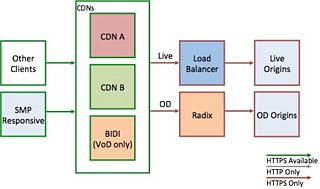

When we started, our streaming services looked like this:

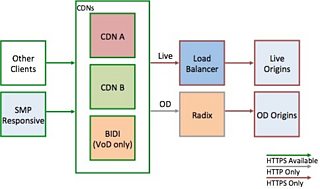

Enabling HTTPS at the CDN edge

We currently use up to three CDNs to distribute AV content — two 3rd party and our own, BIDI. Our 3rd party providers both “support” HTTPS, so we expected this to be straightforward. BIDI required some development work, but as ultimately it’s nginx under the hood, it also wasn’t expected to be too complex.

Actually being ready to make our first HTTPS request for a media asset took around four months.

We took the decision with BIDI to only use Elliptic Curve Cryptography. This limits the platforms our in-house CDN can support, but we thought it was not worth the complexity of supporting older technologies, specifically RSA. Instead we rely on our 3rd party CDN providers for the affected users, who currently make up around 5% of our total. Our core media players for most platforms have automatic failover between CDNs when a problem with one is encountered.
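To illustrate how an ECC-only CDN can sit alongside CDNs that still serve RSA-only clients, here is a minimal Python sketch of the selection and failover logic. It is not the players' actual implementation, which this post does not describe. The CDN names other than BIDI, the preference order, the `client_supports_ecdsa` flag and the `fetch` callable are all assumptions made for the example.

```python
from typing import Callable, Iterable, List, Optional

# Hypothetical CDN list and order: only BIDI's ECC-only policy comes from the post.
CDNS = [
    {"name": "BIDI", "ecc_only": True},
    {"name": "cdn-a", "ecc_only": False},
    {"name": "cdn-b", "ecc_only": False},
]


def candidate_cdns(client_supports_ecdsa: bool) -> List[str]:
    """Return the CDNs this client can talk to, in (assumed) preference order."""
    return [
        cdn["name"]
        for cdn in CDNS
        if client_supports_ecdsa or not cdn["ecc_only"]
    ]


def fetch_with_failover(
    cdns: Iterable[str], fetch: Callable[[str], bytes]
) -> Optional[bytes]:
    """Try each CDN in turn and return the first successful response."""
    for name in cdns:
        try:
            return fetch(name)
        except OSError:
            continue  # problem with this CDN, fail over to the next one
    return None  # every candidate CDN failed
```

A client without ECDSA support simply never sees BIDI in its candidate list, so it is served by the 3rd party CDNs without any special casing elsewhere.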

The BBC had to migrate all of its AV distribution to new hostnames and, in the case of one CDN, to an entirely new product, adding the complexity of needing to rebuild and retest the configuration.

New hostnames and a new platform meant that our CDNs would have cold caches. In normal usage the majority (>97%) of requests for media are served straight from their caches, which is something we rely upon. Once moved to the new configurations, all of the requests would have to come back to our origin cache, Radix, and then potentially to the canonical dynamic AV packaging origin. We couldn’t just flip a switch and have hundreds of gigabits per second of user traffic suddenly come straight back to our packaging origin; nor did we want to do a big bang move in the case of the new CDN product. So we broke our distribution configuration into 40 chunks, for example DASH iPlayer on desktop for CDN A, and migrated one chunk per deployment window. Including cases where we had to roll back and forward as we found issues, this part took three months.
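The reasoning behind migrating in chunks can be made concrete with some rough arithmetic. The sketch below is only an illustration: the 97% hit ratio and the 40 chunks come from the figures above, while the 300 Gbit/s total, the assumption that all chunks carry equal traffic, and the assumption that a freshly migrated chunk misses on every request are simplifications chosen for the example.

```python
def origin_load_gbps(total_gbps: float, hit_ratio: float) -> float:
    """Traffic that reaches the origin when the CDN serves hit_ratio from cache."""
    return total_gbps * (1.0 - hit_ratio)


def migration_peak_gbps(
    total_gbps: float, warm_hit_ratio: float, chunks: int, cold_chunks: int = 1
) -> float:
    """Worst-case origin load while cold_chunks equally sized chunks have empty caches."""
    chunk_gbps = total_gbps / chunks
    warm = origin_load_gbps(chunk_gbps * (chunks - cold_chunks), warm_hit_ratio)
    cold = chunk_gbps * cold_chunks  # cold cache: every request is a miss
    return warm + cold


TOTAL_GBPS = 300.0  # assumed figure standing in for "hundreds of gigabits per second"

steady_state = origin_load_gbps(TOTAL_GBPS, 0.97)
big_bang = migration_peak_gbps(TOTAL_GBPS, 0.97, chunks=40, cold_chunks=40)
one_chunk = migration_peak_gbps(TOTAL_GBPS, 0.97, chunks=40, cold_chunks=1)

print(f"steady state: {steady_state:.1f} Gbit/s")
print(f"big bang:     {big_bang:.1f} Gbit/s")
print(f"one chunk:    {one_chunk:.1f} Gbit/s")
```

Under these assumptions the origin sees roughly 9 Gbit/s in steady state, the full 300 Gbit/s in a big bang move, and about 16 Gbit/s while a single chunk is warming up. In practice caches start filling immediately, so the real peak would be lower than this worst case.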

Rolling out HTTPS

At this stage, we turned on HTTPS for our BBC Worldwide commercial offerings. As HTTPS-only products, they had to be served using our Flash player until this point. We were also rushing to meet Apple’s deadline of requiring HTTPS for all traffic from apps in its App Store, but when this deadline was extended, we backed off a little.

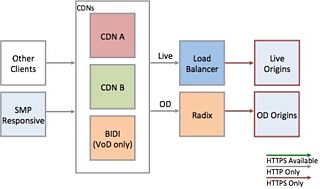

Enabling HTTPS to our origins

Now we had HTTPS available at the CDN edge, but we still used HTTP between the CDNs and our on-demand (OD) origins, an arrangement known as “scheme downgrade”. This was a necessary step for us to meet the initial timescales: the same team looks after both BIDI and Radix and, with BIDI being the priority, enabling HTTPS on Radix was left until later. We moved those over a period of a few weeks, again in small parts to prevent cold caches from overwhelming our services.

A/B Testing in the HTML5 player

By now, HTML5 was our default player for most browsers, so it made up the majority of our desktop AV traffic. We added a new dial we control to the player, enabling it to prefer HTTPS, even when embedded on an HTTP page. This gave us a few benefits:

- Starting to warm up the CDNs to HTTPS, so we could see they performed as expected. At the start of this project, HTTPS distribution capacity with our CDNs was a concern.

- Analysis of client performance compared to HTTP.

HTTPS preference started at 1%, and we gradually ramped it up so that by the end of March, we were running at preferring HTTPS for 50% of playback sessions, although the actual split of playback sessions was closer to 55% HTTP, 45% HTTPS. A couple of weeks of tweaking, and we saw global MPEG-DASH playback sessions split at around 50%.
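One common way to implement a percentage dial like this is to hash a session identifier into a bucket and compare it with the dial value. The Python sketch below shows that general technique. It is not the player's actual code: the function names, the use of SHA-256 and the `media.example.invalid` hostname are assumptions for illustration.

```python
import hashlib


def prefers_https(session_id: str, dial_percent: float) -> bool:
    """Deterministically decide whether a playback session prefers HTTPS.

    The session ID is hashed to a bucket in [0, 10000); sessions whose bucket
    falls below the dial value (in hundredths of a percent) prefer HTTPS.
    """
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < dial_percent * 100


def media_url(path: str, session_id: str, dial_percent: float) -> str:
    """Build a media URL using the scheme this session prefers (hypothetical host)."""
    scheme = "https" if prefers_https(session_id, dial_percent) else "http"
    return f"{scheme}://media.example.invalid{path}"


# Ramping the dial from 1% to 50% only ever adds sessions to the HTTPS cohort.
for dial in (1, 10, 50):
    share = sum(prefers_https(f"session-{i}", dial) for i in range(10_000)) / 100
    print(f"dial {dial:>2}% -> {share:.1f}% of sessions prefer HTTPS")
```

Because the bucket for a given session never changes, raising the dial keeps earlier sessions in the HTTPS cohort. As the figures above show, the dial only expresses a preference, so the measured split of playback sessions can differ from the dial setting.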

The next step was to analyse the anonymous performance data we receive from the player. There were a few hypotheses about how HTTPS delivery would perform:

- High latency connections would experience elevated error rates due to the multiple TCP round-trips required to establish a TLS (<1.3) connection

- Certain UK mobile ISPs could see reduced error rates as they have previously been known to intentionally alter the content served, sometimes breaking it

- Conversely, these mobile ISPs are also often high-latency connections, and also have transparent carrier-grade proxies, so the reduced cacheability of content could degrade performance

- As a result of the increased number of round-trips, average bitrate would reduce slightly and rebuffering events would increase slightly

- Also conversely, the increased integrity could result in fewer media download errors, thus reducing rebuffering

- Reduced error rates in countries with restricted access to the internet

In summary, we weren’t entirely sure what to expect.

“High latency connections would experience elevated error rates due to the multiple TCP round-trips required to establish a TLS (<1.3) connection”

Proving this one in itself is difficult. However, CloudFlare have previously talked about the challenges in Australia, which was the country with the highest HTTPS error rate in our trial.
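The cost behind this hypothesis can be estimated with simple arithmetic. A new TCP connection needs one round trip before any data can be sent, a full TLS 1.2 handshake adds two more, and a full TLS 1.3 handshake adds one. The Python sketch below works that through. It ignores session resumption and other optimisations, and the 20 ms and 300 ms round-trip times are illustrative assumptions rather than measurements from our trial.

```python
# Round trips needed before the first HTTP request can be sent on a new connection.
HANDSHAKE_RTTS = {
    "http": 1,    # TCP handshake only
    "tls1.2": 3,  # TCP handshake + 2 round trips for a full TLS 1.2 handshake
    "tls1.3": 2,  # TCP handshake + 1 round trip for a full TLS 1.3 handshake
}


def setup_delay_ms(rtt_ms: float, mode: str) -> float:
    """Time spent on handshakes before the first request, for a given round-trip time."""
    return rtt_ms * HANDSHAKE_RTTS[mode]


for rtt_ms in (20, 300):  # assumed RTTs: a nearby edge vs. a distant or congested one
    extra = setup_delay_ms(rtt_ms, "tls1.2") - setup_delay_ms(rtt_ms, "http")
    print(f"RTT {rtt_ms} ms: TLS 1.2 adds {extra:.0f} ms per new connection")
```

The extra delay scales linearly with round-trip time, which is why high-latency connections were expected to be affected most.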

“Certain UK mobile ISPs could see reduced error rates as they have previously been known to intentionally alter the content served, sometimes breaking it”

One UK mobile ISP, which we have observed performing incredibly novel alterations to our content in the past, experienced a 30% reduction in errors for clients using HTTPS. In fact, every major UK mobile ISP had a reduction in errors for HTTPS clients, although most to a lesser degree.

“As a result of the increased round-trips, average bitrate would reduce slightly and rebuffering events would increase slightly. Conversely, the increased integrity could result in fewer media download errors, thus reducing rebuffering”

This has proven true overall — whilst there are fewer media download errors, overall rebuffering has increased and average bitrate has dropped, although only by a small fraction.

“Reduced error rates in countries with restricted access to the internet”

Countries such as China, Russia, Saudi Arabia and Turkey are seeing reduced error rates when using HTTPS.

Other observations

- The impact on fixed-line broadband services varies quite substantially from one ISP to another. Some perform better, others worse.

- Traffic that looks like it could potentially be proxy, VPN or small “one-man” ISP services has a substantial increase in failures with HTTPS. This is possibly due to poor TLS performance and configuration in these networks.

A note on pre-HTTP streaming technologies

Some of our older AV content is only available on the web in a streaming format called RTMP. Unfortunately, this can’t easily be moved to HTTPS, as it’s not an HTTP-based protocol in the first place. To resolve this we are currently in the early stages of a huge undertaking to re-encode as much of our archive as we have source for. In the meantime, on some BBC HTTPS sites, some older clips may present an error message when you try to play them, warning that the green ‘secure’ lock in your browser will be broken when you play the clip.

Summary

The BBC now uses HTTPS 50% of the time when delivering media to HTML5 responsive web users on HTTP pages. In addition to that:

- iPlayer on mobile devices and responsive web is now HTTPS-only

- iPlayer Radio on the iOS app is now HTTPS-only

- Around 10% of IPTV iPlayer traffic is HTTPS

- Other responsive web services such as BBC Music have moved to HTTPS, with more to follow

- All World 2020 BBC News sites (e.g. www.bbc.com/amharic) are launching HTTPS-only

We hope that this work will enable more BBC products to finally take the leap into enabling HTTPS for their parts of BBC Online. However, there are still other concerns, most notably ensuring that where we do use HTTPS, we use modern cipher suites and protocols, so that when we say it’s secure, it actually is. For large amounts of our traffic, especially on World Service news sites such as www.bbc.com/pashto, we need to balance access to information on cheap or old devices and browsers with the desire for the security HTTPS offers. For example, 5% of our Pashto users currently wouldn’t be able to support TLS 1.2, a standard that has existed since 2008.
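To show what requiring a modern protocol version means in practice, here is a small sketch using Python's standard `ssl` module. It only demonstrates the general idea of setting a protocol floor. The BBC's actual servers and their settings are not described in this post, and the function name is made up for the example.

```python
import ssl


def modern_server_context() -> ssl.SSLContext:
    """Create a server-side TLS context that refuses anything older than TLS 1.2."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Clients that can only speak older protocol versions will fail the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = modern_server_context()
print(ctx.minimum_version.name)
```

With a floor like this, the share of users on devices that cannot negotiate TLS 1.2 would be unable to connect at all, which is the trade-off described above.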

Next, we’re looking towards making HTTPS AV perform as well as or better than HTTP in the average case, or towards explaining which market area is performing worse and why. We’re currently testing HTTP/2 for media distribution as well. Whilst BBC R&D’s previous HTTP/2 experiment suggested h2 performed worse for AV, the technology has changed, and we believe that at the very least changes to the minimum size that HPACK (compression of HTTP headers in HTTP/2) operates on could reduce compression/decompression overhead and have some beneficial impact on performance. So far our testing is in line with this.